This blog series was jointly written by Tim McHale and Greg Scullard (Developer Advocates).

This is part 4 of a 4-part series. In part 3, we started to walk through an ERC-20-like application network using the Hedera Consensus Service (HCS).

- Part 1: Permissioned networks with HCS

- Part 2: Decentralized application networks

- Part 3: Mapping an ERC-20 Token to HCS

- Part 4: Mapping an ERC-20 Token to HCS (continued)

Subscribing to a Mirror Node

Subscribing to a mirror node is simple with the SDKs. You connect to a mirror node and specify the Consensus Topic Id you're interested in. You can optionally specify a time window to limit which consensus messages you are notified of. Then you wait for the mirror node to stream the messages that match these parameters.

This happens in the HederaMirror class.

First, we set up local variables such as MIRROR_NODE_ADDRESS, which holds the mirror node's API URL (read from the environment via Dotenv).

We also create a mirror client with this address.

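```java
// Package names as in the Hedera Java SDK v1.x used by this sample;
// Token, Primitive, Construct and Primitives are classes from the sample project
import com.hedera.hashgraph.sdk.consensus.ConsensusTopicId;
import com.hedera.hashgraph.sdk.crypto.ed25519.Ed25519PublicKey;
import com.hedera.hashgraph.sdk.mirror.MirrorClient;
import com.hedera.hashgraph.sdk.mirror.MirrorConsensusTopicQuery;
import com.hedera.hashgraph.sdk.mirror.MirrorConsensusTopicResponse;
import io.github.cdimascio.dotenv.Dotenv;
import org.bouncycastle.math.ec.rfc8032.Ed25519;

import java.time.Instant;
import java.util.Objects;

/**
 * Subscribes to a mirror node and handles notifications
 */
public final class HederaMirror {

    private static final String MIRROR_NODE_ADDRESS = Objects.requireNonNull(Dotenv.load().get("MIRROR_NODE_ADDRESS"));

    private static final MirrorClient mirrorClient = new MirrorClient(MIRROR_NODE_ADDRESS);
```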

We then have a subscribe method which initiates the connection to the mirror node.

First, we check whether there is a Topic Id to subscribe to. If there isn't, we return immediately.

```java
    /**
     * Subscribes to a mirror node and sleeps for a few seconds to allow notifications to come through.
     * The subscription starts 1 nanosecond after the last consensus message we received from the mirror node.
     *
     * @param token the token object
     * @param seconds the number of seconds to sleep after subscribing
     * @throws Exception in the event of an error
     */
    public static void subscribe(Token token, long seconds) throws Exception {

        if (token.getTopicId().isEmpty()) {
            return;
        }
```

We then initialise the startTime for the subscription, which ensures we only receive new notifications. For example, if we already processed some notifications yesterday, there is no need to process them again. Their effects are already reflected in our local state.

To do so, we take the LastConsensusSeconds and LastConsensusNanos values stored in our token object and add 1 nanosecond. This ensures we don't receive a duplicate of the last notification we processed.

If this is the first time the application runs, both values are zero, so the startTime is 1 nanosecond after the Unix epoch. We therefore receive every notification since the Topic Id was created, which lets us catch up with the entire token history.

```java
        Instant startTime = Instant.ofEpochSecond(token.getLastConsensusSeconds(), token.getLastConsensusNanos() + 1);
```
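The start-time arithmetic can be checked in isolation. The sketch below wraps it in a hypothetical ResumePoint helper that is not part of the sample project. It covers the two edge cases described above. The first is a first run, where nothing has been stored yet. The second is a last message that landed on the final nanosecond of a second. In that case, Instant.ofEpochSecond carries the extra nanosecond into the next second for us.

```java
import java.time.Instant;

/**
 * Hypothetical helper (not part of the sample project) that computes the
 * mirror subscription start time from the last consensus timestamp we stored.
 */
final class ResumePoint {

    private ResumePoint() {}

    static Instant startTime(long lastConsensusSeconds, long lastConsensusNanos) {
        // Instant.ofEpochSecond normalises a nano adjustment of 1_000_000_000
        // into the next whole second, so no manual carry is needed
        return Instant.ofEpochSecond(lastConsensusSeconds, lastConsensusNanos + 1);
    }

    public static void main(String[] args) {
        // First run: nothing stored yet, so the stream starts 1 ns after the epoch
        System.out.println(startTime(0, 0));            // 1970-01-01T00:00:00.000000001Z
        // Last message landed on the final nanosecond of second 10
        System.out.println(startTime(10, 999_999_999)); // 1970-01-01T00:00:11Z
    }
}
```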

We then start the mirror subscription itself. We specify the Topic Id we want notifications for and the start time. We also use a lambda expression to indicate what to do with each notification. In our case, we call a method named handleNotification, which we detail below.

Note: we should also implement some kind of retry here. If the connection to the mirror node drops for some reason, we should try to reconnect automatically. Here we just print the error to the console (Throwable::printStackTrace), but a more robust solution would re-establish the connection where possible.

```java
        new MirrorConsensusTopicQuery()
            .setTopicId(ConsensusTopicId.fromString(token.getTopicId()))
            .setStartTime(startTime)
            .subscribe(mirrorClient, resp -> handleNotification(token, resp),
                // On gRPC error, print the stack trace
                Throwable::printStackTrace);
```
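The retry mentioned in the note above could look something like the following. This is a minimal sketch under our own assumptions. Resubscriber and SubscribeAction are hypothetical names. The only thing we rely on from the SDK is that subscribe accepts an error handler, which is the role Throwable::printStackTrace plays above. Each time the error handler fires, the helper waits with exponential backoff and then runs the subscription again.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Consumer;

/**
 * Hypothetical helper (not part of the sample project): re-runs a subscription
 * with exponential backoff each time its error callback fires.
 */
final class Resubscriber {

    /** Starts a subscription and calls onError if the stream fails. */
    interface SubscribeAction {
        void subscribe(Consumer<Throwable> onError);
    }

    private final ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
    private final AtomicInteger failures = new AtomicInteger();
    private final SubscribeAction action;
    private final long baseMillis;
    private final long capMillis;
    private final int maxRetries;

    Resubscriber(SubscribeAction action, long baseMillis, long capMillis, int maxRetries) {
        this.action = action;
        this.baseMillis = baseMillis;
        this.capMillis = capMillis;
        this.maxRetries = maxRetries;
    }

    /** Delay before retry n (1-based): baseMillis * 2^(n - 1), capped at capMillis. */
    static long backoffMillis(int retry, long baseMillis, long capMillis) {
        int shift = Math.min(Math.max(retry - 1, 0), 30); // small shift avoids overflow for realistic delays
        return Math.min(baseMillis << shift, capMillis);
    }

    void start() {
        action.subscribe(this::onError);
    }

    private void onError(Throwable error) {
        int retry = failures.incrementAndGet();
        if (retry > maxRetries) {
            System.err.println("Giving up after " + maxRetries + " retries: " + error);
            scheduler.shutdown();
            return;
        }
        long delay = backoffMillis(retry, baseMillis, capMillis);
        System.err.println("Mirror stream failed (" + error + "), retrying in " + delay + " ms");
        scheduler.schedule(this::start, delay, TimeUnit.MILLISECONDS);
    }

    /** Cancels pending retries; the scheduler thread is not a daemon, so call this on exit. */
    void shutdown() {
        scheduler.shutdownNow();
    }
}
```

In HederaMirror.subscribe, the SubscribeAction would rebuild the MirrorConsensusTopicQuery shown above on every attempt. It would recompute startTime from the token's last consensus timestamp and pass onError in place of Throwable::printStackTrace. The timestamp is updated as each notification is handled, so a reconnect resumes where the previous stream stopped rather than replaying the whole topic. A fuller version would also reset the failure counter once messages start flowing again.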

Finally, we sleep for a few seconds to keep the subscription alive while notifications come through. A few seconds should be sufficient for this example.

Note: as mentioned earlier, a complete implementation would keep this subscription running in a separate thread, but for the purpose of this example, this is sufficient.

```java
        // After this sleep period, the subscription ends
        Thread.sleep(seconds * 1000);
    }
```
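A complete implementation would keep notification processing alive on its own thread rather than sleeping for a fixed period. One way to sketch this, using only the standard library, is a hypothetical NotificationPump. The mirror callback only enqueues each response. A single long-lived worker thread then applies the responses to local state in arrival order, so state is never mutated from two threads at once.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Consumer;

/**
 * Hypothetical sketch: subscription callbacks only enqueue notifications, and
 * one long-lived worker thread applies them to local state in arrival order.
 */
final class NotificationPump<T> implements AutoCloseable {

    // Sentinel telling the worker to stop once everything queued before it is handled
    private static final Object STOP = new Object();

    private final BlockingQueue<Object> queue = new LinkedBlockingQueue<>();
    private final Thread worker;

    NotificationPump(Consumer<T> handler) {
        worker = new Thread(() -> {
            try {
                while (true) {
                    Object item = queue.take();
                    if (item == STOP) {
                        return;
                    }
                    @SuppressWarnings("unchecked")
                    T notification = (T) item;
                    try {
                        handler.accept(notification);
                    } catch (RuntimeException e) {
                        // Log and keep going so one bad message doesn't stop processing
                        e.printStackTrace();
                    }
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "notification-pump");
        worker.start();
    }

    /** Safe to call from any thread, e.g. the mirror subscription callback. */
    void offer(T notification) {
        queue.add(notification);
    }

    /** Lets the worker drain what is already queued, then waits for it to finish. */
    @Override
    public void close() {
        queue.add(STOP);
        try {
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

Inside HederaMirror, the pump could be created as `new NotificationPump<MirrorConsensusTopicResponse>(resp -> handleNotification(token, resp))`. The subscription would then pass pump::offer as its notification handler, and the application would close the pump on shutdown.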

We are now set to receive notifications from the mirror node, but we also need to process them into local state. Let's look at the notification handler first.

The handler takes a token object along with a MirrorConsensusTopicResponse object.

This MirrorConsensusTopicResponse object contains the notification from the mirror node itself. More specifically, it holds the message we sent for consensus and the consensusTimestamp allocated by Hedera. It also holds the runningHash and sequence number for the Topic Id.

Note: we could also check the runningHash and sequence number here, to make sure we are not receiving a malicious message or missing a message in the sequence.
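To illustrate the sequence check, here is a sketch of a hypothetical SequenceChecker. It rests on two assumptions. First, sequence numbers on a topic start at 1 and increase by exactly one per message. Second, the application persists the last processed sequence number alongside the consensus timestamp; the sample's Token object only stores the timestamp. Verifying the runningHash is not shown here.

```java
/**
 * Hypothetical sketch: flags duplicate or missing messages on a topic, assuming
 * sequence numbers start at 1 and increase by exactly one per message.
 */
final class SequenceChecker {

    enum Result { OK, DUPLICATE, GAP }

    private long lastSequence;

    /** @param lastProcessedSequence 0 on a first run, otherwise the persisted value */
    SequenceChecker(long lastProcessedSequence) {
        this.lastSequence = lastProcessedSequence;
    }

    Result check(long sequenceNumber) {
        if (sequenceNumber <= lastSequence) {
            return Result.DUPLICATE; // already applied: skip it
        }
        if (sequenceNumber != lastSequence + 1) {
            return Result.GAP; // something was missed: do not advance
        }
        lastSequence = sequenceNumber;
        return Result.OK;
    }

    long lastSequence() {
        return lastSequence;
    }
}
```

In handleNotification, the checker would be consulted with the notification's sequence number before the message is applied. On GAP, a reasonable reaction is to resubscribe from the last processed timestamp rather than apply the message.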

```java
    /**
     * Handles notifications from a mirror node
     *
     * @param token the token object
     * @param notification the notification data from the mirror node
     */
    private static void handleNotification(Token token, MirrorConsensusTopicResponse notification) {
```

We then parse the notification's message into our Primitive message and extract the signature and the signer's address (its public key).

We can also update our token's last consensus timestamp so that we don't have to re-process this message in the future.

```java
        try {
            Primitive primitive = Primitive.parseFrom(notification.message);
            byte[] signature = primitive.getSignature().toByteArray();
            String address = primitive.getPublicKey();
            Ed25519PublicKey signingKey = Ed25519PublicKey.fromString(address);

            // set last consensus time stamp
            token.setLastConsensusSeconds(notification.consensusTimestamp.getEpochSecond());
            token.setLastConsensusNanos(notification.consensusTimestamp.getNano());
```

We then process the message according to the primitive it contains.

First, we check whether it holds a Construct primitive and, if so, extract it.

Next, we verify the signature using the public key. If verification fails, we print an error message and return.

Finally, we call the construct method of the Primitives class to update local state.

```java
            // Process response
            if (primitive.hasConstruct()) {
                Construct construct = primitive.getConstruct();
                byte[] payload = construct.toByteArray();

                if (!Ed25519.verify(signature, 0, signingKey.toBytes(), 0, payload, 0, payload.length)) {
                    System.out.println("Signature verification on message failed");
                    return;
                }

                Primitives.construct(token, address, construct.getName(), construct.getSymbol(), construct.getDecimals());
```
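The sample verifies signatures with BouncyCastle's Ed25519.verify over the serialized Construct bytes. To show the sign/verify round trip on its own, the sketch below swaps in the JDK's built-in Ed25519 support (Java 15 or later) instead of BouncyCastle. SignatureCheck is a hypothetical name, and the payload is a stand-in for construct.toByteArray(). The key property is that the receiver must verify exactly the bytes the sender signed, so changing a single bit makes verification fail.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.PrivateKey;
import java.security.PublicKey;
import java.security.Signature;

/** Hypothetical sketch using the JDK's Ed25519 provider (Java 15+), not BouncyCastle. */
final class SignatureCheck {

    static byte[] sign(PrivateKey key, byte[] payload) throws GeneralSecurityException {
        Signature signer = Signature.getInstance("Ed25519");
        signer.initSign(key);
        signer.update(payload);
        return signer.sign();
    }

    static boolean verify(PublicKey key, byte[] payload, byte[] signature) throws GeneralSecurityException {
        Signature verifier = Signature.getInstance("Ed25519");
        verifier.initVerify(key);
        verifier.update(payload);
        return verifier.verify(signature);
    }

    public static void main(String[] args) throws GeneralSecurityException {
        KeyPair pair = KeyPairGenerator.getInstance("Ed25519").generateKeyPair();
        // Stand-in for construct.toByteArray(): any serialized payload works
        byte[] payload = "construct:MyToken:MTK:2".getBytes(StandardCharsets.UTF_8);
        byte[] signature = sign(pair.getPrivate(), payload);
        System.out.println(verify(pair.getPublic(), payload, signature)); // true
        payload[0] ^= 1; // tamper with a single bit
        System.out.println(verify(pair.getPublic(), payload, signature)); // false
    }
}
```

One caveat applies to this design. Protocol Buffers does not guarantee a canonical serialization, so the sample assumes that re-serializing a parsed Construct reproduces the bytes the sender signed. A message layout that carried the signed payload as a raw bytes field would avoid relying on that assumption.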

Notification processing (Apply consensus)

We're nearly there. Let's now see how we apply the messages that have reached consensus to local state.

This is managed by the Primitives class.

Let’s focus on the construct method here.

The construct method takes several arguments: the token object representing our local state, the owner address, the name of the token, its symbol, and finally the number of decimals for the token.

These are all standard ERC-20 token inputs. In Solidity, the owner address would be represented by msg.sender.

```java
    public static void construct(Token token, String ownerAddress, String name, String symbol, int decimals) throws Exception {

        System.out.println(String.format("Processing mirror notification - construct %s %s %s", name, symbol, decimals));
```

We check that the local state does not already hold a token. If it doesn't, we set the properties on the token object.

```java
        if (token.getName().isEmpty()) {
            token.setName(name);
            token.setSymbol(symbol);
            token.setDecimals(decimals);
```

Then, we obtain the address entry for ownerAddress through token.addAddress and set its owner property to true. This records who owns the token. If we later need to validate a function call such as burn, we can check that the token owner requested it and not a malicious actor.

```java
            Address address = token.addAddress(ownerAddress);
            address.setOwner(true);
```
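To see the construct rule in isolation, the following sketch models it with a hypothetical TokenState class. TokenState is a heavily simplified stand-in for the sample's Token and Address classes. A second Construct message is ignored rather than overwriting the token. The owner check is what a later primitive such as burn could rely on.

```java
import java.util.LinkedHashSet;
import java.util.Set;

/**
 * Hypothetical, heavily simplified stand-in for the sample's Token and Address
 * classes, used only to illustrate the construct rule.
 */
final class TokenState {
    String name = "";
    String symbol = "";
    int decimals;
    private final Set<String> owners = new LinkedHashSet<>();

    /** Applies a Construct primitive; returns false if a token already exists. */
    boolean construct(String ownerAddress, String name, String symbol, int decimals) {
        if (!this.name.isEmpty()) {
            return false; // every participant ignores a second construct in the same way
        }
        this.name = name;
        this.symbol = symbol;
        this.decimals = decimals;
        owners.add(ownerAddress);
        return true;
    }

    /** What a later primitive such as burn could check before acting. */
    boolean isOwner(String address) {
        return owners.contains(address);
    }
}
```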

That's it: we now have all the building blocks for constructing a token in an application network on HCS.

We have a means of capturing user requests, submitting these requests to HCS for consensus, and receiving notifications once consensus has been reached. Finally, we have a means of processing these notifications into local state changes.
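The whole flow can be illustrated without any network at all. In the sketch below, a plain List stands in for the ordered stream of messages that HCS and the mirror node deliver; this is an assumption made purely for illustration. Each replica, here one call to a hypothetical replay function, applies the same transfers in the same order with deterministic rules. As a result, all replicas end up with identical state. The Transfer type and its validation rule are far simpler than the sample's primitives.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/**
 * Hypothetical illustration: replicas that apply the same consensus-ordered
 * messages with deterministic rules end up with identical local state.
 */
final class ReplicaDemo {

    /** A hypothetical transfer message; amounts are in the token's smallest unit. */
    static final class Transfer {
        final String from;
        final String to;
        final long amount;

        Transfer(String from, String to, long amount) {
            this.from = from;
            this.to = to;
            this.amount = amount;
        }
    }

    /** Replays the log, starting from an initial supply held by the owner. */
    static Map<String, Long> replay(List<Transfer> log, String owner, long supply) {
        // TreeMap keeps keys sorted, so iteration order is identical everywhere
        Map<String, Long> balances = new TreeMap<>();
        balances.put(owner, supply);
        for (Transfer t : log) {
            long fromBalance = balances.getOrDefault(t.from, 0L);
            if (t.amount <= 0 || fromBalance < t.amount) {
                continue; // every replica rejects the same invalid transfer
            }
            balances.put(t.from, fromBalance - t.amount);
            balances.merge(t.to, t.amount, Long::sum);
        }
        return balances;
    }

    public static void main(String[] args) {
        List<Transfer> consensusLog = List.of(
                new Transfer("alice", "bob", 30),
                new Transfer("bob", "carol", 50), // rejected: bob only holds 30
                new Transfer("bob", "carol", 10));
        Map<String, Long> replicaA = replay(consensusLog, "alice", 100);
        Map<String, Long> replicaB = replay(consensusLog, "alice", 100);
        System.out.println(replicaA);                  // {alice=70, bob=20, carol=10}
        System.out.println(replicaA.equals(replicaB)); // true
    }
}
```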

The complete example on GitHub includes a number of additional function calls such as mint and transfer.

Is it worth it?

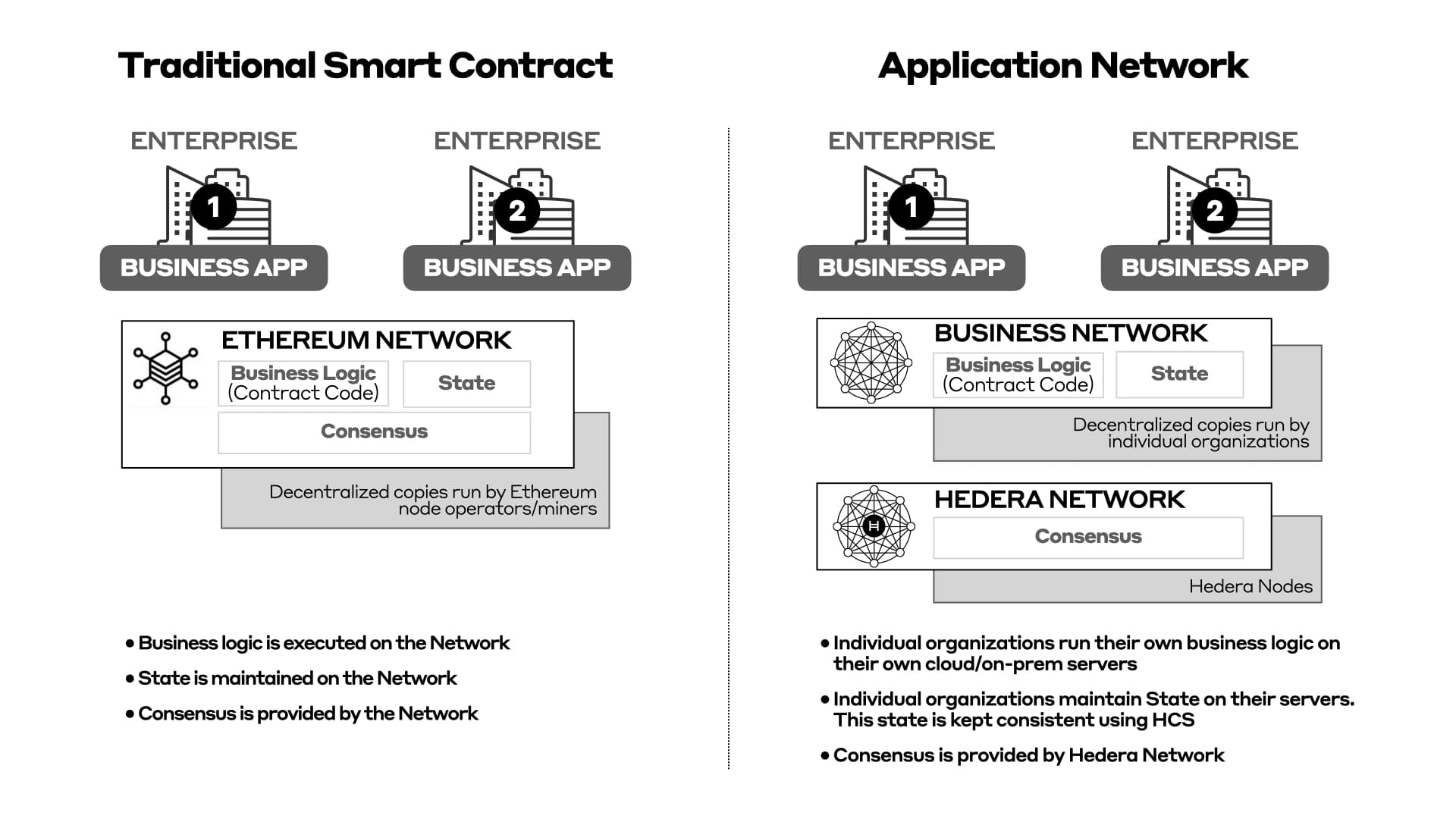

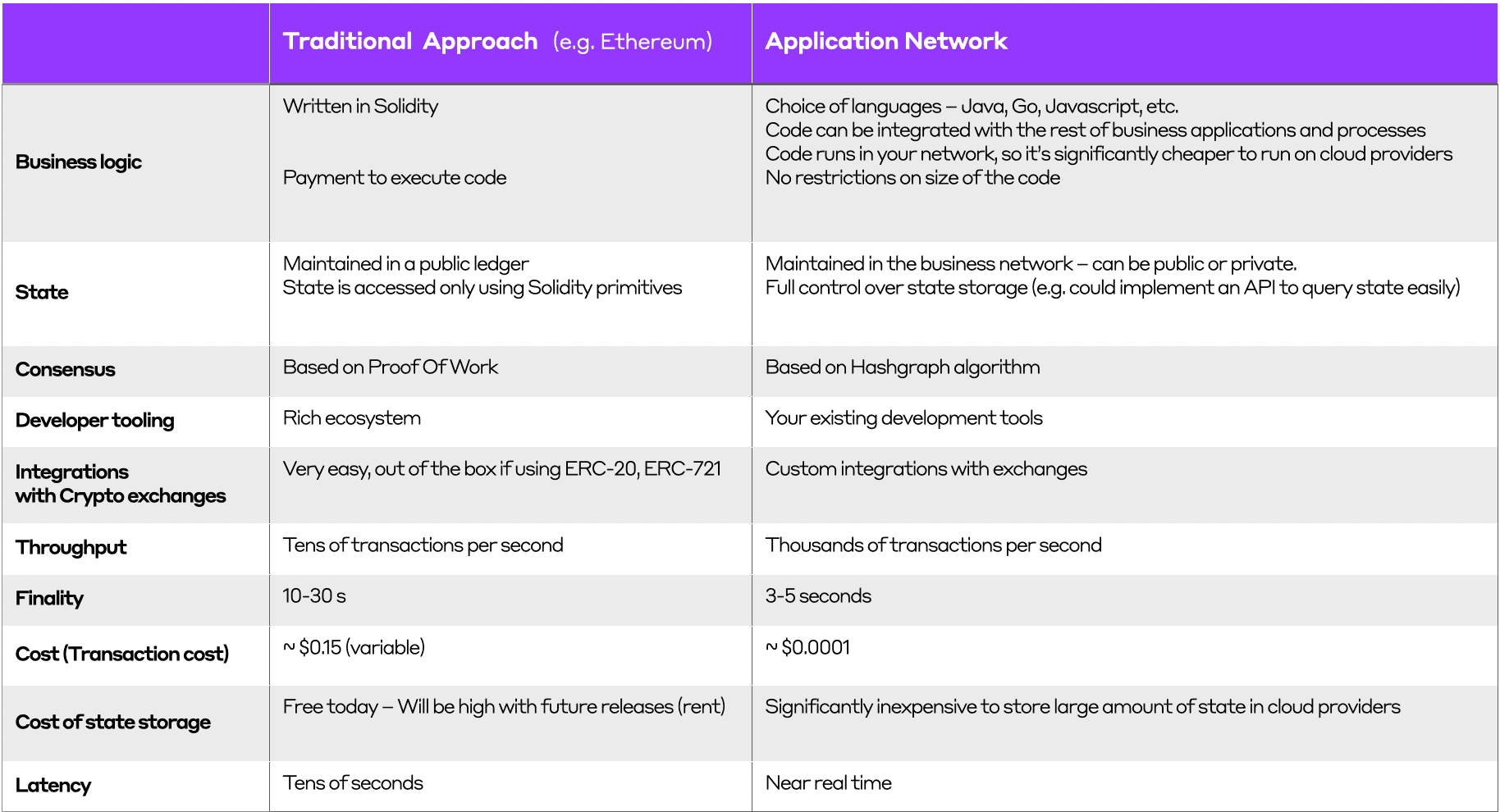

Before concluding, we wanted to compare and contrast the traditional smart contract approach with application networks on HCS.

With a traditional implementation, the network bears the bulk of the workload for the applications, because it is responsible for both executing the code and storing the data. If thousands of applications are vying for resources on the network, it soon becomes a bottleneck, with consequences for adoption, costs, and so on.

With an application network, the Hedera network only provides consensus. The rest of the application (execution and storage) is delegated to external resources. This leads to more consistent costs and a scale that can't be achieved with the traditional approach.

With an application network, developers are also free to choose whichever language they wish, and they can easily integrate the application into an existing ecosystem of applications.

Neither scale nor operating costs are a barrier any longer, and the application still benefits from Hedera's consensus latency and finality.

Observations and conclusions

We hope this has given you food for thought and maybe encouraged you to start looking at how you could implement a token or any other logic using an application network on HCS.

If so, please consider the following:

- The application's code must be 100% deterministic. For example, it should not generate random numbers unless those are shared with other participants via a message. Individually generating a random number in response to a message would result in inconsistent state. Floating-point operations (or any data type that makes approximations) are another way local state can become inconsistent, as are non-deterministic calls to external systems.

- When connecting to an existing Topic Id for the first time, you should ask the mirror node to stream all consensus messages since the start. However, you may have already participated and responded to messages (by way of acknowledgments). In that case, the application must make sure it doesn't re-generate acknowledgments that other participants would not expect. One way to mitigate this (there may be others) is to make the application idempotent. Two identical acknowledgments are then treated as one, with no error and no duplicate entries in local state.

- When reconnecting to an existing Topic Id via a mirror node subscription, subscribe from the consensus timestamp of the last message you received + 1 nanosecond. This avoids needlessly re-downloading every notification and receiving a duplicate of the last message.
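To make the idempotency suggestion concrete, here is a sketch of a hypothetical AckRegistry. It assumes an acknowledgment can be identified by the acknowledging participant and the sequence number of the request being acknowledged. The sample project may model this differently. Recording the same acknowledgment twice leaves a single entry and reports that nothing changed. A participant replaying history can also call alreadyAcknowledged to avoid re-sending an acknowledgment.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

/**
 * Hypothetical sketch of idempotent acknowledgment handling: recording the same
 * acknowledgment twice has exactly the same effect as recording it once.
 */
final class AckRegistry {

    // Keyed on data every participant sees identically (who acknowledged which
    // request), never on local values such as wall-clock time
    private final Set<Map.Entry<String, Long>> acknowledged = new HashSet<>();

    /** Returns true only if this acknowledgment changed local state. */
    boolean record(String participant, long requestSequence) {
        return acknowledged.add(Map.entry(participant, requestSequence));
    }

    /** Lets a replaying participant check whether it already acknowledged a request. */
    boolean alreadyAcknowledged(String participant, long requestSequence) {
        return acknowledged.contains(Map.entry(participant, requestSequence));
    }

    int size() {
        return acknowledged.size();
    }
}
```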

Many thanks for making it this far; you definitely deserve a clap. Again, we hope this long blog post has encouraged you to investigate further how to build using HCS.

And remember, these are the early days for Hedera and the Hedera Consensus Service. We have a ton of work to do and standards for the community to set. If you'd like to be a part of that, be sure to join the developer Discord chat or provide suggestions for improvements using Hedera Improvement Proposals.